Best Practices for Bioprocess Data Integration

Bioprocessing Runs on Data. The Challenge Is Making That Data Useful.

Upstream development, downstream purification, analytics, automation systems, CDMOs, LIMS, batch records, sensors, and bioreactors all generate data every minute. In theory, this should make bioprocessing smarter and faster. In reality, most teams spend more time pulling data together than learning from it.

Different naming conventions, inconsistent units, missing timestamps, disconnected control systems, and siloed CDMO data make it hard to see the complete picture. Fragmented data slows investigations, complicates tech transfer, limits the value of analytics, and stalls AI adoption.

This is why leading organizations are investing in bioprocess data integration and building harmonized, AI-ready datasets. When data is unified, contextualized, and accessible, teams move faster, make better decisions, and scale with confidence.

Below are the essential best practices used by high-performing bioprocess and manufacturing teams.

Best Practice 1: Start With Data Connectivity Across All Systems

Data integration begins with reliable connectivity. Modern bioprocess environments require continuous data flow from:

- Bioreactors and control systems

- Sensors and PAT tools

- OSIsoft PI and other historians

- LIMS and ELNs

- CDMOs and external partners

- Downstream purification skids

- QC and QA systems

Without direct, automated ingestion, teams fall back on manual file exports that introduce delay and inconsistency. A strong integration strategy ensures that data enters your ecosystem automatically and continuously.

Invert’s platform uses prebuilt connectors to establish this connectivity quickly, allowing teams to unify years of historical data and stream live data with little IT lift.

Best Practice 2: Harmonize Variables, Units, and Metadata Early

Once data is captured, the next step is harmonization. This means standardizing:

- Parameter names

- Units

- Sample identifiers

- Time alignment

- Metadata such as lot, run, product, method, and analyst

Harmonization is one of the most important steps. It transforms scattered data into a coherent dataset that can be compared across runs, bioreactors, sites, and CDMOs.

Without harmonization, even simple comparisons become time-consuming. With it, teams gain a consistent and reusable foundation that supports analytics, visualization, and AI modeling.
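
To make the idea concrete, here is a minimal Python sketch of name and unit harmonization. Everything in it is an assumption for illustration: the alias map, the canonical units, the `Reading` type, and the `harmonize` function are hypothetical and do not describe how any particular platform implements this step.

```python
from dataclasses import dataclass

# Hypothetical alias map: raw tag names from different sources -> one canonical name.
CANONICAL_NAMES = {
    "Temp": "temperature",
    "TEMP_PV": "temperature",
    "bioreactor_temp": "temperature",
    "DO": "dissolved_oxygen",
    "pO2": "dissolved_oxygen",
}

# Assumed canonical unit per parameter, plus converters from other units.
CANONICAL_UNITS = {"temperature": "degC", "dissolved_oxygen": "percent_sat"}
CONVERTERS = {
    ("degF", "degC"): lambda v: (v - 32.0) * 5.0 / 9.0,
    ("K", "degC"): lambda v: v - 273.15,
}


@dataclass(frozen=True)
class Reading:
    name: str
    value: float
    unit: str


def harmonize(reading: Reading) -> Reading:
    """Map a raw reading onto the canonical name and unit, or fail loudly."""
    if reading.name not in CANONICAL_NAMES:
        raise KeyError(f"unmapped parameter name: {reading.name!r}")
    name = CANONICAL_NAMES[reading.name]
    target = CANONICAL_UNITS[name]
    if reading.unit == target:
        return Reading(name, reading.value, target)
    convert = CONVERTERS.get((reading.unit, target))
    if convert is None:
        raise ValueError(f"no conversion from {reading.unit!r} to {target!r}")
    return Reading(name, convert(reading.value), target)


# Two sites report the same measurement under different names and units.
site_a = harmonize(Reading("TEMP_PV", 98.6, "degF"))
site_b = harmonize(Reading("bioreactor_temp", 37.0, "degC"))
```

Raising an error on an unmapped name or unit, rather than passing the value through, is a deliberate choice in this sketch: silent pass-through is how inconsistent data ends up in downstream comparisons.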

Best Practice 3: Add Context to Every Data Point

Bioprocess decisions require context. A temperature value means nothing unless you know which run it belongs to, which phase of the process it was captured in, and which operator or control strategy was applied.

Contextualization links each data point to its full process story.

Modern bioprocess data platforms automatically attach context such as:

- Process phase

- Feeding strategy

- Equipment identifiers

- Consumables

- Date and time

- Product and batch

- Set point vs. measured value

This creates a dataset that is not just accurate but meaningful.
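
As a rough illustration of contextualization, the Python sketch below attaches run-level metadata and the active process phase to a raw data point. The phase log, field names, identifiers, and the `phase_at` and `contextualize` helpers are all hypothetical and chosen only for the example.

```python
from bisect import bisect_right
from datetime import datetime
from typing import Optional

# Hypothetical phase log for one run: (phase start time, phase name), sorted by time.
PHASES = [
    (datetime(2024, 1, 1, 8, 0), "inoculation"),
    (datetime(2024, 1, 2, 8, 0), "growth"),
    (datetime(2024, 1, 5, 8, 0), "production"),
]
PHASE_STARTS = [start for start, _ in PHASES]


def phase_at(timestamp: datetime) -> Optional[str]:
    """Return the phase active at `timestamp`, or None if the run had not started."""
    idx = bisect_right(PHASE_STARTS, timestamp) - 1
    return PHASES[idx][1] if idx >= 0 else None


def contextualize(point: dict, run_metadata: dict) -> dict:
    """Return a copy of the raw point with run metadata and process phase attached."""
    return {**point, **run_metadata, "phase": phase_at(point["timestamp"])}


# Illustrative values only.
run_metadata = {"run_id": "RUN-001", "batch": "B-42", "equipment_id": "BR-03"}
raw = {
    "timestamp": datetime(2024, 1, 3, 14, 30),
    "parameter": "temperature",
    "measured": 36.8,
    "setpoint": 37.0,
}
enriched = contextualize(raw, run_metadata)
```

The enriched record carries the set point, the measured value, the equipment, and the phase together, so a later query does not have to reconstruct that context from separate systems.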

Invert’s unified data foundation ensures that every value is tied to consistent metadata. This produces a trustworthy dataset that supports both scientific investigation and regulatory review.

Best Practice 4: Build an AI-Ready Data Foundation

AI only works when data is clean, structured, and context-rich. Machine learning models depend on well-organized data. If the underlying data is inconsistent or incomplete, AI outputs become unreliable.

An AI-ready foundation includes:

- Complete lineage and traceability

- Time-aligned variables

- Harmonized naming

- Standardized metadata

- No gaps or missing context

- Consistent data types
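
A few of these properties can be checked automatically before data reaches a model. The Python sketch below is one possible readiness check, under stated assumptions: the required field list is a hypothetical schema, and the records are assumed to belong to a single parameter series so that timestamp gaps are meaningful.

```python
from datetime import datetime, timedelta

# Assumed minimal schema; a real dataset would define its own required fields.
REQUIRED_FIELDS = ("run_id", "parameter", "unit", "timestamp", "value")


def readiness_issues(records: list, max_gap: timedelta) -> list:
    """List missing metadata, non-numeric values, and time gaps in one series."""
    issues = []
    for i, rec in enumerate(records):
        missing = [f for f in REQUIRED_FIELDS if rec.get(f) is None]
        if missing:
            issues.append(f"record {i}: missing {', '.join(missing)}")
        elif isinstance(rec["value"], bool) or not isinstance(rec["value"], (int, float)):
            issues.append(f"record {i}: non-numeric value {rec['value']!r}")
    # Gap check across the sorted timestamps of the series.
    stamps = sorted(r["timestamp"] for r in records if r.get("timestamp") is not None)
    for earlier, later in zip(stamps, stamps[1:]):
        if later - earlier > max_gap:
            issues.append(f"gap of {later - earlier} after {earlier.isoformat()}")
    return issues


t0 = datetime(2024, 1, 1, 0, 0)
base = {"run_id": "RUN-001", "parameter": "temperature", "unit": "degC"}
records = [
    {**base, "timestamp": t0, "value": 37.0},
    {**base, "timestamp": t0 + timedelta(minutes=1), "value": 37.1, "unit": None},
    {**base, "timestamp": t0 + timedelta(minutes=2), "value": "37.1"},
    {**base, "timestamp": t0 + timedelta(minutes=30), "value": 36.9},
]
issues = readiness_issues(records, max_gap=timedelta(minutes=5))
```

In this example the check reports a missing unit, a value stored as text, and a 28-minute gap. Lineage and traceability are not covered here; they need more than a per-record check.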

Organizations that invest in this foundation unlock downstream benefits such as:

- Real-time monitoring

- Predictive modeling

- Automated root cause analysis

- Digital twins

- Parametric release tools

Invert’s architecture is designed specifically for AI readiness. It ensures that every dataset meets the requirements needed for reliable analytics and transparent AI.

Best Practice 5: Enable Real-Time Visualization and Analytics

Bioprocess runs move quickly. Waiting until the end of a run to interpret results limits the ability to optimize or intervene. With integrated, harmonized data feeding a live intelligence environment, teams can:

- Monitor bioreactors in real time

- Detect anomalies before they escalate

- Compare runs instantly

- Understand variability across scales or clones

- Review full process histories without manual data prep

This shift from delayed analysis to real-time insight shortens learning cycles and accelerates scale-up.
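
One simple way to flag anomalies on a live signal is a rolling z-score. The Python sketch below is illustrative only: the `RollingAnomalyDetector` class, the window size, the warm-up count, and the threshold are assumptions, and production monitoring would typically use multivariate or process-specific methods.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flag values that sit far from the mean of a recent window of readings."""

    def __init__(self, window: int = 20, threshold: float = 3.0, warmup: int = 5):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.warmup = warmup

    def update(self, value: float) -> bool:
        """Return True if `value` deviates strongly from the recent baseline."""
        is_anomaly = False
        if len(self.values) >= self.warmup:  # wait for a few points before judging
            mu, sigma = mean(self.values), stdev(self.values)
            is_anomaly = sigma > 0 and abs(value - mu) > self.threshold * sigma
        if not is_anomaly:
            self.values.append(value)  # keep flagged outliers out of the baseline
        return is_anomaly


# Illustrative pH stream with a sudden drop at the end.
detector = RollingAnomalyDetector()
ph_stream = [7.00, 7.01, 6.99, 7.02, 7.00, 7.01, 6.20]
flags = [detector.update(v) for v in ph_stream]
```

Only the final reading is flagged in this stream. Excluding flagged values from the baseline keeps a single excursion from widening the window's spread, at the cost of adapting slowly to a genuine shift in the process.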

Invert’s intelligence layer makes this possible without separate dashboards or custom pipelines.

Best Practice 6: Support Tech Transfer With Structured, Reusable Data

Tech transfer is one of the most data-intensive activities in bioprocessing. Poor data integration creates unnecessary risk during handoff. Harmonized datasets allow teams to:

- Send consistent, structured datasets to CDMOs

- Maintain ongoing visibility across sites

- Reuse models and templates without rework

- Reduce miscommunication and manual reconciliation

Data integration creates a repeatable, traceable process that accelerates success from development through GMP manufacturing.

Best Practice 7: Prioritize Governance, Traceability, and Compliance

Bioprocess data must meet strict regulatory expectations. Integrated environments need to support:

- Audit trails

- Access controls

- Historical versioning

- Validation and reproducibility

- GxP and 21 CFR Part 11 readiness

Teams should be able to trust every value, every timestamp, and every transformation.
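
To show what a tamper-evident record of transformations can look like, here is a small Python sketch of a hash-chained audit trail. It is an illustration of the idea only, not a compliant audit trail: the `append_entry` and `verify` helpers and the entry fields are hypothetical, and timestamps, electronic signatures, and access controls are left out to keep the example short.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash an entry body deterministically (sorted keys, UTF-8 JSON)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(trail: list, actor: str, action: str, payload: dict) -> dict:
    """Append an entry whose hash covers its content and the previous entry's hash."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS_HASH
    body = {"actor": actor, "action": action, "payload": payload, "prev_hash": prev_hash}
    entry = {**body, "hash": _digest(body)}
    trail.append(entry)
    return entry


def verify(trail: list) -> bool:
    """Recompute every hash and check that each entry links to the one before it."""
    prev_hash = GENESIS_HASH
    for entry in trail:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


# Illustrative actors, actions, and payloads.
trail = []
append_entry(trail, "ingest-service", "ingest", {"file": "run_001.csv", "rows": 1440})
append_entry(trail, "analyst-1", "unit_conversion", {"parameter": "temperature", "from": "degF", "to": "degC"})
intact = verify(trail)
```

Because each hash includes the previous one, editing an earlier entry after the fact invalidates every entry that follows it, which is what lets a reviewer trust the recorded sequence of transformations.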

Invert’s platform provides continuous traceability from ingestion through analysis, helping quality and regulatory teams maintain confidence and governance across the full digital ecosystem.

Best Practice 8: Use Integrated Data to Power Continuous Improvement

Once data is unified and contextualized, organizations can unlock higher-value use cases.

Examples include:

- Batch comparison dashboards

- Early deviation detection

- Feeding strategy optimization

- Media and supplement evaluation

- Scale-up modeling

- Clone performance benchmarking

- Multivariate analysis across years of runs

These insights are only possible with strong data integration.

Why Modern Bioprocess Teams Prioritize Integration

Effective bioprocess data integration delivers real impact:

- Faster time to insight

- Reduced experimental duplication

- Fewer deviations

- More confident decision making

- Shorter scale-up cycles

- More predictable yield and quality

Teams with harmonized data spend less time cleaning spreadsheets and more time running experiments that move programs forward.

The Foundation for Intelligent Biomanufacturing

The future of bioprocessing will rely on continuous learning, advanced automation, and AI-supported decision making. All of this depends on the quality, context, and accessibility of data.

A strong data foundation is the first step toward predictive models, digital twins, and next-generation biomanufacturing.

Invert helps bioprocess teams unify fragmented data into a single, AI-ready foundation. With real-time visibility, transparent analytics, and reliable traceability, organizations scale faster and operate with greater confidence.

Learn how Invert’s data integration platform helps bioprocess teams build the connected foundation needed for scientific and operational excellence.

.png)

Engineer Blog Series: From Bioprocess to Software with Anthony Quach

Welcome to Invert’s Engineer Blog Series — a behind-the-scenes look at the product and how it’s built.In this post, software engineer Anthony Quach shares how his career in bioprocess development led him into software, and how that experience shapes the engineering decisions behind Invert.

Read More ↗

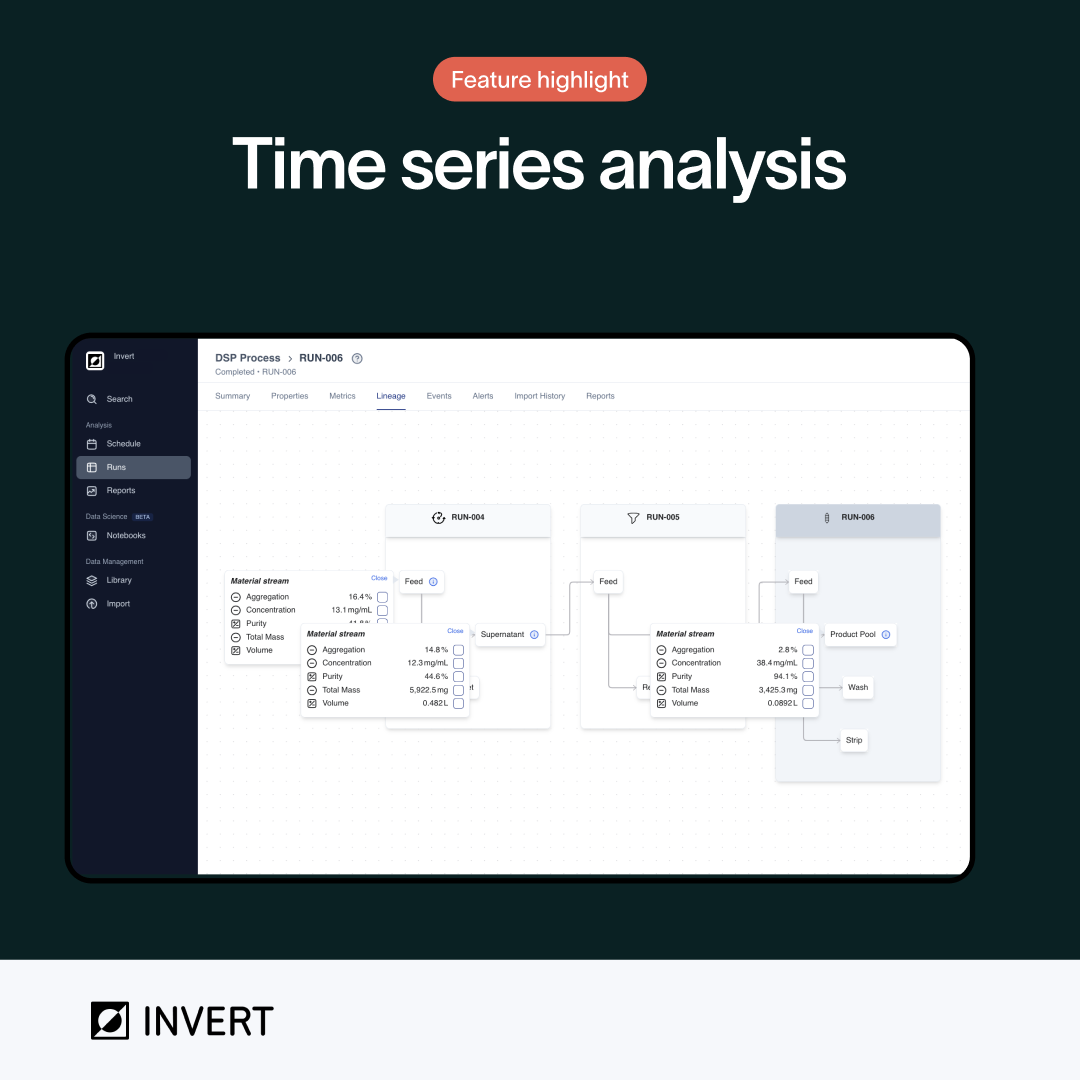

Connecting Shake Flask to Final Product with Lineage Views in Invert

Invert’s lineage view connects products across every unit operation and material transfer throughout the entire process. It acts as a family tree for your product, tracing its origins back through purification, fermentation, and inoculation. Instead of manually tracking down the source of each data point, lineages automatically show material streams as they pass through each step.

Read More ↗

Engineer Blog Series: Security & Compliance with Tiffany Huang

Welcome to Invert's Engineering Blog Series, a behind-the-scenes look into the product and how it's built. For our third post, senior software engineer Tiffany Huang speaks about how trust and security is a foundational principle at Invert, and how we ensure that data is kept secure, private, and compliant with industry regulations.

Read More ↗